BERT language model: BERT is an open-source machine learning framework for natural language processing (NLP) that helps computers understand ambiguous language in text. It uses the surrounding text to establish context and is based on Transformers, a deep learning model in which every output element is connected to every input element. The weightings between these elements are calculated dynamically based on their connection, a process known in NLP as attention.

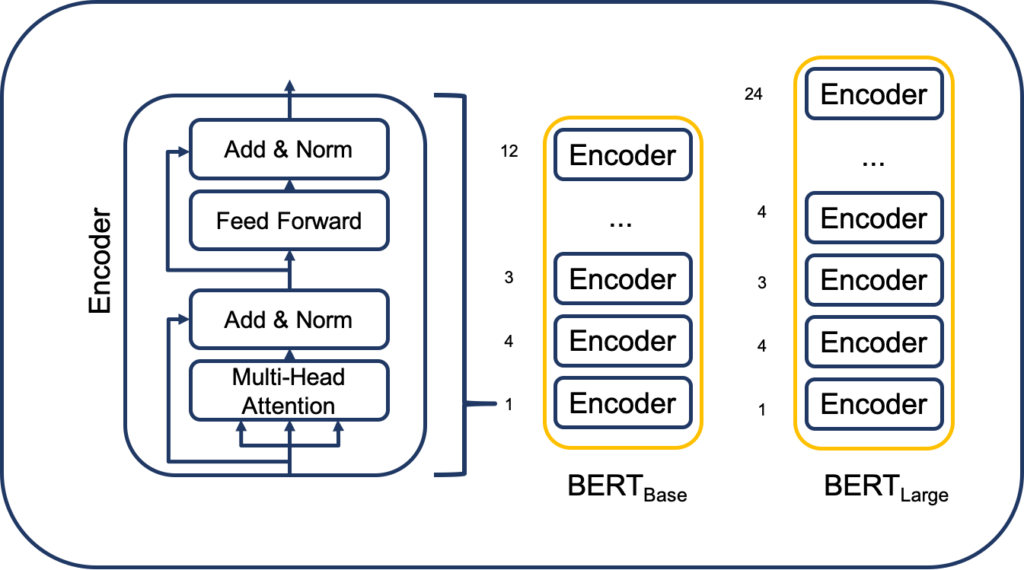

BERT stands for Bidirectional Encoder Representations from Transformers. It reads text input in both directions at once, a capability made possible by Transformers. It was pre-trained on unlabeled text from English Wikipedia and the BooksCorpus dataset, and it can be fine-tuned with question-and-answer datasets.

The pre-training of BERT is based on two different but related NLP tasks: Masked Language Modeling and Next Sentence Prediction. Masked Language Model (MLM) training hides a word in a sentence, and the program predicts what word has been hidden (masked) based on the hidden word’s context. Next Sentence Prediction training aims to predict whether two given sentences have a logical, sequential connection or whether their relationship is just random.
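The two pre-training tasks can be illustrated with a minimal sketch of how training examples might be prepared. The function names, the 15% masking rate, and the 50/50 split between true and random next sentences are assumptions for illustration (the rates follow figures commonly cited for BERT but are not stated above), and the sketch always substitutes a `[MASK]` token, which is a simplification of the real procedure.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, rng=None):
    """Hide a random subset of tokens for Masked Language Modeling.

    Returns (masked_tokens, labels). labels[i] is the original token
    wherever a mask was placed, and None everywhere else.
    """
    if rng is None:
        rng = random.Random(0)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)   # the model must predict this position
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


def make_nsp_pair(sentences, index, rng=None):
    """Build one Next Sentence Prediction example.

    Returns (sentence_a, sentence_b, is_next). About half of the time
    sentence_b is the true successor of sentence_a; otherwise it is a
    randomly chosen unrelated sentence. Assumes at least three sentences
    and index < len(sentences) - 1.
    """
    if rng is None:
        rng = random.Random(0)
    sentence_a = sentences[index]
    if rng.random() < 0.5:
        return sentence_a, sentences[index + 1], True
    candidates = [s for i, s in enumerate(sentences)
                  if i not in (index, index + 1)]
    return sentence_a, rng.choice(candidates), False


if __name__ == "__main__":
    sentence = "the man went to the store to buy milk".split()
    print(mask_tokens(sentence, rng=random.Random(42)))
    doc = ["He went out.", "He bought milk.", "Penguins swim.", "It rained."]
    print(make_nsp_pair(doc, 0, rng=random.Random(42)))
```

During pre-training, the model would be asked to recover the entries in `labels` and to classify `is_next`; neither task needs human annotation, because both targets come from the raw text itself.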

Background

In 2017, Google introduced Transformers. At that time, recurrent neural networks (RNN) and convolutional neural networks (CNN) were the primary models used for natural language processing (NLP) tasks. While these models were competent, they had the disadvantage of requiring sequences of data to be processed in a fixed order. On the other hand, Transformers can process data in any order, which makes them more efficient. With Transformers, it became possible to train on larger amounts of data, which facilitated the creation of pre-trained models like BERT.

In 2018, Google introduced BERT and released it as open source. BERT achieved groundbreaking results in 11 natural language understanding tasks, including sentiment analysis, semantic role labeling, sentence classification, and the disambiguation of polysemous words. BERT surpassed earlier approaches such as word2vec and GloVe in interpreting context and polysemous words. BERT is capable of addressing ambiguity, which research scientists in the field describe as the greatest challenge to natural language understanding.

In October 2019, Google announced that it would begin applying BERT to its production search algorithms in the United States. At the time, BERT was expected to affect 10% of Google search queries. Organizations are advised not to try to optimize content for BERT, because BERT aims to provide a natural-feeling search experience. Instead, users are advised to keep queries and content focused on the natural subject matter and the user experience.

By December 2019, BERT had been applied to more than 70 languages.

How BERT works: BERT language model

The goal of any natural language processing (NLP) technique is to understand human language as it is naturally spoken. One technique that has gained immense popularity is BERT (Bidirectional Encoder Representations from Transformers). BERT is a pre-trained model that can predict a word in a blank space, and it is notable because its pre-training requires no labeled data. Instead, it learns from an unlabeled, plain text corpus, such as the entirety of the English Wikipedia or the Brown Corpus.

BERT’s pre-training provides a base layer of knowledge, which can be fine-tuned to a user’s specifications. This process, called transfer learning, allows BERT to adapt to new content and queries. Google’s research on Transformers makes BERT possible, and the transformer is the model’s core component that enables it to understand context and ambiguity in language.

The transformer processes every word in a sentence in relation to all the other words in that sentence, rather than processing words one at a time. This enables BERT to understand the complete context of a word and, therefore, to better understand what the searcher intends. This approach contrasts with word embedding models such as GloVe and word2vec, which map every word to a single fixed vector that represents only one dimension of the word’s meaning.
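To make the idea of relating every word to every other word concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification under stated assumptions: the input vectors are toy values, and the queries, keys, and values are the input vectors themselves, whereas a real transformer applies learned projections and uses multiple attention heads.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Each position scores itself against every position (including
    those to its left and right), turns the scores into weights, and
    returns a weighted average of all input vectors.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        context = [sum(w * vec[j] for w, vec in zip(weights, vectors))
                   for j in range(dim)]
        outputs.append(context)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Three toy 2-dimensional "word" vectors (hypothetical values).
    toy = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    outputs, weights = self_attention(toy)
    for row in weights:
        print([round(w, 3) for w in row])
```

Because each output vector is a mixture of all the inputs, the same word can end up with a different representation in a different sentence, which is the property that a fixed word-to-vector lookup lacks.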

While word embedding models are adept at many general NLP tasks, they struggle with the context-heavy, predictive nature of question answering, because every word is fixed to a single vector and therefore a single meaning. BERT uses masked language modeling, in which the word in focus does not “see itself” and has no fixed meaning independent of its context. BERT must identify the masked word based solely on context. In BERT, therefore, words are defined by their surroundings and not by a pre-fixed identity. As the English linguist John Rupert Firth put it, “You shall know a word by the company it keeps.”

At the center of BERT’s design is a self-attention mechanism, made possible by bidirectional Transformers, that lets it process text in a more human-like way.

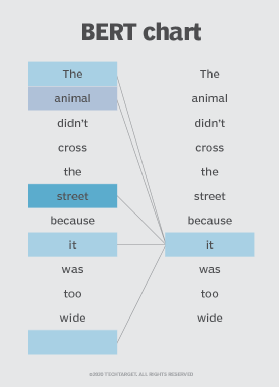

One of BERT’s significant contributions is that it tracks how the meaning of a word can change as a sentence develops. Each added word contributes to the overall meaning, and the more words a sentence or phrase contains, the more ambiguous the word in focus can become. BERT resolves this ambiguity by reading bidirectionally, taking all the other words in the sentence into account and eliminating the left-to-right momentum that biases a word toward one meaning as the sentence progresses.

For instance, in a search query, BERT can determine which earlier word in the sentence the word “is” refers to. It uses its attention mechanism to weigh the candidates and selects the word with the highest calculated score as the correct association. This helps BERT return more precise search results, which makes it valuable across a variety of natural language processing applications.
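The selection step described above can be sketched in a few lines. The candidate words and their raw scores below are hypothetical values invented for illustration; in a real model the scores come from learned attention layers.

```python
import math


def attention_weights(scores):
    """Turn raw attention scores into weights that sum to 1 (softmax)."""
    peak = max(scores.values())
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def best_association(scores):
    """Return the candidate word with the highest attention weight."""
    weights = attention_weights(scores)
    return max(weights, key=weights.get)


if __name__ == "__main__":
    # Hypothetical raw scores between the word "is" and earlier words.
    toy_scores = {"the": 0.2, "animal": 2.9, "street": 1.1}
    print(best_association(toy_scores))  # prints "animal"
```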

What is BERT used for?

BERT is currently being used at Google to optimize the interpretation of user search queries. BERT excels at several functions that make this possible, including:

- Sequence-to-sequence-based language generation tasks such as:

  - Question answering

  - Abstract summarization

  - Sentence prediction

  - Conversational response generation

- Natural language understanding tasks such as:

  - Polysemy and coreference resolution (polysemous words look or sound the same but have different meanings; coreference links different expressions that refer to the same thing)

  - Word sense disambiguation

  - Natural language inference

  - Sentiment classification

BERT is expected to have a large impact on voice search as well as text-based search, which has been error-prone with Google’s NLP techniques to date. BERT is also expected to drastically improve international SEO, because its proficiency in understanding context helps it interpret patterns that different languages share without having to understand the language completely. More broadly, BERT has the potential to drastically improve artificial intelligence systems across the board.

BERT is open source, meaning anyone can use it. Google claims that users can train a state-of-the-art question answering system in just 30 minutes on a cloud tensor processing unit (TPU), or in a few hours on a graphics processing unit (GPU). Many other organizations, research groups, and separate teams within Google are fine-tuning the BERT model architecture with supervised training, either to optimize it for efficiency (by modifying the learning rate, for example) or to specialize it for certain tasks by pre-training it with certain contextual representations. Some examples include:

- patentBERT: a BERT model fine-tuned to perform patent classification.

- docBERT: a BERT model fine-tuned for document classification.

- bioBERT: a pre-trained biomedical language representation model for biomedical text mining.

- VideoBERT: a joint visual-linguistic model that learns from large amounts of unlabeled data on YouTube.

- SciBERT: a pre-trained BERT model designed for scientific text.

- G-BERT: a BERT model pre-trained on medical codes with hierarchical representations built using graph neural networks (GNN), then fine-tuned to make medical recommendations.

- TinyBERT by Huawei: a smaller “student” BERT that learns from the original “teacher” BERT through transformer distillation. It produces promising results while being 7.5 times smaller and 9.4 times faster at inference.

- DistilBERT by HuggingFace: a smaller, faster, and cheaper version of BERT that is trained from BERT and then has certain architectural aspects removed for the sake of efficiency.

Each of these models adapts BERT either to the language of a particular domain or to a tighter efficiency budget.
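The distillation idea behind models such as TinyBERT and DistilBERT can be illustrated with a generic soft-target loss, in which a small student model is trained to match the output distribution of a larger teacher. This is a minimal sketch of the general technique, not the actual training objective of either model (both use additional terms that are not shown here). The function names, the temperature value, and the logits are assumptions for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with a temperature; higher values give softer distributions."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between teacher and student output distributions.

    The loss is 0 when the student reproduces the teacher's distribution
    exactly and grows as the two distributions diverge.
    """
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return sum(t * math.log(t / s)
               for t, s in zip(teacher, student) if t > 0)


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]        # hypothetical teacher logits
    close_student = [1.9, 1.1, 0.2]  # student that roughly agrees
    far_student = [0.1, 1.0, 2.0]    # student that disagrees
    print(distillation_loss(teacher, close_student))
    print(distillation_loss(teacher, far_student))
```

Minimizing a loss of this kind pushes the student toward the teacher’s behavior, which is how a much smaller network can retain a large share of the original model’s language understanding.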