A transformer is a neural network architecture that converts one type of input into another type of output. The term was coined in the 2017 Google paper "Attention Is All You Need," which showed that the architecture could train a neural network to translate English to French with higher accuracy and in significantly less training time than other neural networks of the time.

Transformers are useful well beyond translation: they have proven effective at generating text, images, and instructions for robots. This adaptability has led to their use in multimodal AI, where they model relationships between different types of data, for example by converting natural language instructions into images or robot commands.

Transformers power many applications built on large language models (LLMs) and related generative models, including ChatGPT, Google Search, DALL-E, and Microsoft Copilot. They have become a standard component of natural language processing applications because they outperform earlier approaches.

Researchers have also applied transformer models in other domains, such as chemistry, biology, and healthcare. These models can learn to work with chemical structures, predict how proteins fold, and analyze medical data at scale.

The key to transformers is their use of attention mechanisms. Attention weighs how much related words or tokens contribute to the context of a given token, whether that token represents a word, an image segment, a protein structure, or a speech phoneme.

The concept of attention has been present in AI processing since the 1990s, but it was the work of a Google team in 2017 that showed attention could be used directly to encode word meanings and language structures. This removed the need for additional encoding steps using dedicated neural networks and made it possible to model virtually any type of information, leading to significant breakthroughs in AI development over recent years.

What can a transformer model do?

In deep learning, transformers are replacing the previously dominant neural network architectures, recurrent neural networks (RNNs) and convolutional neural networks (CNNs). RNNs were designed to process sequential data such as speech, sentences, and code, but they worked best on short strings and struggled with longer sequences. Even with the introduction of long short-term memory (LSTM), RNNs remained slow because they process a sequence one element at a time. Transformers, in contrast, can handle longer sequences and process every word or token simultaneously, which makes them easier to scale.

CNNs, on the other hand, were designed to process data in parallel, for example by analyzing multiple regions of a photo simultaneously to identify similarities in features such as lines, shapes, and textures. These networks excel at comparing neighboring areas. Transformer models, however, are proving better at comparing regions that are spatially distant from each other. Transformers also work well with unlabeled data, a significant advantage in many applications.

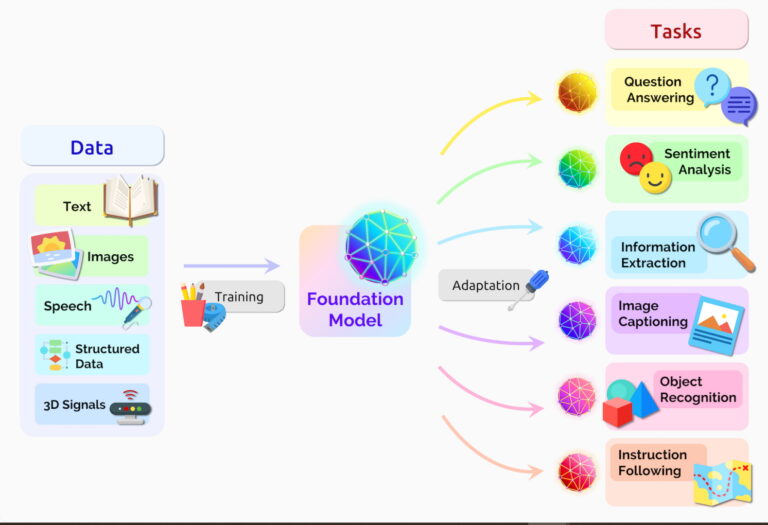

Transformers can also learn the meaning of text efficiently by analyzing very large sets of unlabeled data. This capability allows researchers to scale transformers to hundreds of billions or even trillions of parameters. In practice, a model pre-trained on unlabeled data serves as a starting point and is then refined for a specific task using labeled data. This two-step process reduces the expertise required and demands far less processing power for the second, refinement step.

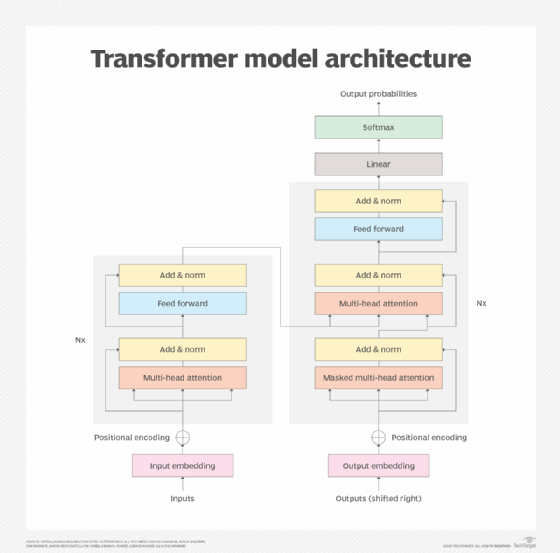

Transformer model architecture

A transformer architecture consists of an encoder and a decoder that work in tandem. The central element of this structure is the attention mechanism, which allows transformers to encode the meaning of each word based on the estimated importance of other words or tokens. Because attention relates every token to every other token directly, transformers can process all words or tokens simultaneously, which speeds up processing and has enabled ever larger large language models (LLMs).

In the attention mechanism, the encoder block transforms each word or token into a vector that is then weighted by the influence of the other words. To illustrate, consider the two sentences:

1. He poured the pitcher into the cup and filled it.

2. He poured the pitcher into the cup and emptied it.

In these sentences, the meaning of the word “it” would be weighted differently due to the change from “filled” to “emptied.” The attention mechanism discerns this difference, connecting “it” to the cup being filled in the first sentence and to the pitcher being emptied in the second sentence.
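To make the weighting concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration under stated assumptions, not the implementation used by any particular model: the two-dimensional embeddings are hand-picked rather than learned, and the queries, keys, and values are the embeddings themselves, whereas real transformers derive them through learned projection matrices.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of token vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # New representation: weighted average of all value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Toy, hand-picked 2-d embeddings (assumed values, not learned ones).
tokens = ["pitcher", "cup", "it"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.9]]

# Simplification: queries, keys, and values are all the raw embeddings.
outputs, weights = self_attention(embeddings, embeddings, embeddings)

for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

With these toy vectors, the row of weights for "it" places more weight on "cup" than on "pitcher" only because the chosen vectors are more similar. In a trained model, surrounding words such as "filled" or "emptied" would shift those weights.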

The decoder reverses this process in the target domain. Although the architecture was originally designed for translating English to French, the same mechanism can turn short English questions and instructions into longer answers or, conversely, condense a lengthy article into a succinct summary. This flexibility makes transformers useful not only for language translation but also for any task that involves generating a response from a given input.

Transformer model training

Training a transformer involves two phases. In the first phase, known as pre-training, the model is trained on a large amount of unlabeled data. During this phase, the model learns the structure of language, or of a phenomenon such as protein folding, by learning how nearby elements interact and influence each other. This self-supervised process, which relies on the self-attention mechanism, is both computationally expensive and energy-intensive, and training the largest models often costs millions of dollars.
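One common way to get a training signal from unlabeled text is next-token prediction, where the text itself supplies the answers. The sketch below shows only how such training pairs could be built. It assumes whitespace tokenization and a hypothetical `context_size` parameter for brevity, and it is not a statement about which objective any specific model uses; some models mask tokens and predict the masked positions instead.

```python
def next_token_pairs(text, context_size=3):
    """Build (context, target) pairs from raw text, with no human labels."""
    tokens = text.split()  # simplification: real models use subword tokenizers
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))  # the next word is the "label"
    return pairs


pairs = next_token_pairs("he poured the pitcher into the cup")
for context, target in pairs:
    print(context, "->", target)
```

Each pair asks the model to predict a word from the words before it, so any amount of raw text can be turned into training examples without manual annotation.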

Once pre-trained, the transformer model can be fine-tuned for specific tasks such as text classification, language translation, or question answering. This second phase demands less expertise and processing power, which makes it more accessible. For instance, a technology company might fine-tune a chatbot to answer customer service queries at a level suited to each user's knowledge, while a law firm might adjust a model for contract analysis. Similarly, a development team might tailor a model to its own coding conventions to improve code quality and reduce errors.

Proponents of transformers argue that the substantial expense of pre-training large general-purpose models pays off in the long run, because it saves time and money when the model is customized for diverse use cases. The size of a model, usually measured by its number of parameters, is sometimes treated as a proxy for performance. However, a larger model is not guaranteed to perform better or to be more useful.

Recent research by leading tech companies and academic institutions has challenged the notion that larger models necessarily perform better. For example, Google researchers demonstrated that a mixture-of-experts technique can make the training of LLMs up to seven times more efficient than other models. Meanwhile, the 13-billion-parameter version of Meta’s Large Language Model Meta AI (Llama) outperformed a 175-billion-parameter generative pre-trained transformer (GPT) model on most benchmarks. A 65-billion-parameter variant of Llama was competitive with models of more than 500 billion parameters, showing that efficiency and architecture play a crucial role in overall performance.
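The general idea behind mixture-of-experts can be sketched as follows: a small gate picks one expert sub-network per token, so only a fraction of the model's parameters is used for each input. This is a simplified illustration, not the method from the Google research mentioned above. The experts and gate weights are hypothetical stand-ins, and production systems typically also scale the expert output by the gate probability and balance load across experts.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def route_top1(token, gate_weights):
    """Return the index of the expert with the highest gate score."""
    scores = [dot(row, token) for row in gate_weights]
    return max(range(len(scores)), key=lambda i: scores[i])


def moe_layer(token, gate_weights, experts):
    """Run only the selected expert; the other experts do no work."""
    chosen = route_top1(token, gate_weights)
    return chosen, experts[chosen](token)


# Hypothetical experts: simple functions standing in for feed-forward blocks.
experts = [
    lambda v: [2.0 * x for x in v],
    lambda v: [x + 1.0 for x in v],
]

# Hypothetical gate: one row of weights per expert (assumed, not learned).
gate_weights = [[1.0, 0.0], [0.0, 1.0]]

chosen, output = moe_layer([0.5, 3.0], gate_weights, experts)
print(chosen, output)
```

Adding more experts increases the total parameter count, but each token still passes through only one of them, which is why parameter count and compute cost can diverge.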

Transformer model applications

Transformers can be applied to a wide array of tasks that involve processing a given input to generate an output. Here are several examples of such use cases:

- Language Translation: Transforming text from one language to another, facilitating communication across linguistic barriers.

- Chatbot Development: Programming chatbots that are more engaging and capable of providing useful responses to user inquiries.

- Document Summarization: Generating concise summaries of lengthy documents, aiding in information extraction and comprehension.

- Text Generation: Creating extensive documents or articles based on brief prompts or topics provided.

- Chemical Structure Generation: Generating drug chemical structures based on specific prompts or requirements, facilitating drug discovery and development.

- Image Generation: Creating images from textual descriptions or prompts, enabling the generation of visual content.

- Image Captioning: Providing descriptive captions for images, enhancing accessibility and understanding.

- Robotic Process Automation (RPA): Generating scripts for automating repetitive tasks based on concise descriptions or requirements, streamlining workflow automation.

- Code Completion: Offering suggestions for code completion based on existing code snippets, aiding developers in writing efficient and error-free code.

These examples demonstrate the broad applicability of transformers across various domains, showcasing their effectiveness in automating tasks, enhancing communication, and facilitating creative endeavors.

Transformer model implementations

Implementations of transformers continue to evolve, showcasing improvements in size, versatility, and applicability across various domains such as medicine, science, and business applications. Here are some of the most promising transformer implementations:

- Google’s Bidirectional Encoder Representations from Transformers (BERT): Among the earliest Large Language Models (LLMs) based on transformers, BERT set the stage for subsequent advancements.

- OpenAI’s Generative Pre-trained Transformers (GPT) Series: OpenAI’s GPT series, including GPT-2, GPT-3, GPT-3.5, and GPT-4, has made significant strides in transformer-based language modeling. ChatGPT, which is built on these models, is tuned for conversation.

- Meta’s Large Language Model Meta AI (Llama): Despite its smaller size, Llama achieves comparable performance to models ten times its size, highlighting its efficiency and effectiveness.

- Google’s Pathways Language Model (PaLM): This model demonstrates versatility by performing tasks across multiple domains, encompassing text, images, and robotic controls.

- OpenAI’s DALL-E: DALL-E stands out for its ability to generate images from textual descriptions, opening new possibilities in creative content generation.

- GatorTron by the University of Florida and Nvidia: Analyzing unstructured data from medical records, GatorTron holds promise in medical applications, facilitating data analysis and insights extraction.

- DeepMind’s AlphaFold 2: AlphaFold 2 addresses the complex challenge of predicting how proteins fold, providing valuable insights for drug discovery and bioinformatics.

- AstraZeneca and Nvidia’s MegaMolBART: This implementation generates new drug candidates based on chemical structure data, offering potential breakthroughs in pharmaceutical research and development.

These transformer implementations represent the cutting edge of AI technology, driving advancements in language understanding, image generation, data analysis, and scientific discovery across diverse domains.