What is ChatGPT’s architecture?

To understand what ChatGPT can do, it helps to look at how its architecture and training algorithms contribute to its strong performance in natural language processing.

Refinement through Fine-tuning:

Fine-tuning is central to ChatGPT’s adaptability. After pre-training on a large, general dataset, the model is trained further on a smaller, task-specific dataset to improve its performance on applications such as question answering or summarization. Because the same pre-trained model can be fine-tuned in different directions, fine-tuning lets it specialize in many different tasks.
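To make the pre-train-then-fine-tune sequence concrete, here is a minimal Python sketch. It is a toy, not how ChatGPT is actually trained: a bigram table of scores stands in for the Transformer (an assumption for brevity), both corpora are made up, and the function names `train` and `next_word_prob` are hypothetical. The point is the order of operations: train on broad text first, then continue training the same weights on a small task-specific dataset.

```python
import math


def softmax(logits):
    """Convert a list of logits into probabilities."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(weights, vocab, corpus, lr, epochs):
    """Gradient descent on next-word cross-entropy for a toy bigram model.

    weights[i][j] is the logit (score) for word j following word i.
    The weights are updated in place and also returned.
    """
    index = {w: i for i, w in enumerate(vocab)}
    for _ in range(epochs):
        for sentence in corpus:
            words = sentence.split()
            for prev, nxt in zip(words, words[1:]):
                row = weights[index[prev]]
                probs = softmax(row)
                target = index[nxt]
                # Cross-entropy gradient w.r.t. the logits: probs - one_hot(target).
                for j in range(len(row)):
                    row[j] -= lr * (probs[j] - (1.0 if j == target else 0.0))
    return weights


def next_word_prob(weights, vocab, prev, nxt):
    """Model probability that `nxt` follows `prev`."""
    index = {w: i for i, w in enumerate(vocab)}
    return softmax(weights[index[prev]])[index[nxt]]


# Hypothetical toy corpora: broad "general" text and a small task dataset.
general = ["the cat sat", "the dog ran", "the cat ran"]
task = ["the summary is short", "the summary is clear"]

vocab = sorted({w for s in general + task for w in s.split()})
weights = [[0.0] * len(vocab) for _ in vocab]

# 1. Pre-training on the broad corpus.
train(weights, vocab, general, lr=0.5, epochs=50)
before = next_word_prob(weights, vocab, "the", "summary")

# 2. Fine-tuning: start from the pre-trained weights and keep training on the
#    task data, with a smaller learning rate and fewer passes.
train(weights, vocab, task, lr=0.1, epochs=20)
after = next_word_prob(weights, vocab, "the", "summary")

print(f"P('summary' | 'the'): before fine-tuning {before:.3f}, after {after:.3f}")
```

Because fine-tuning starts from the pre-trained weights instead of from scratch, it can get by with far less data. Using a smaller learning rate for the second phase is a common choice, meant to adjust the model without erasing what it already learned.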

Diverse Applications:

ChatGPT can handle a wide range of natural language processing tasks, which makes it useful in many applications. Notable examples include:

- Text Elaboration: ChatGPT expands and completes text from a prompt, which is useful for content generation, sentence completion, and developing ideas.

- Translation: Having learned patterns across many languages, ChatGPT can translate text from one language to another, helping people communicate across language barriers.

- Question Answering: ChatGPT gives relevant, coherent answers to user questions.

- Summarization: The model can condense long passages into short, informative summaries.

- Conversational AI: ChatGPT carries on natural, multi-turn dialogue, which suits applications such as customer service and interactive storytelling.

Inherent Constraints:

Despite its proficiency in language processing, ChatGPT has limitations, including:

- Incorrect answers: The model sometimes produces plausible-sounding but incorrect or nonsensical answers. This is hard to fix, partly because reinforcement learning training has no source of truth to check answers against.

- Sensitivity to phrasing: Slightly rephrasing a question can produce a different answer. For example, the model may claim not to know the answer to one phrasing yet answer a slight rewording correctly.

- Verbosity: ChatGPT is often excessively verbose and overuses certain phrases. This stems partly from biases in the training data: human trainers tended to prefer longer answers that look more comprehensive.

- No clarifying questions: When a query is ambiguous, the model usually guesses what the user meant instead of asking for clarification, which can lead to misinterpretations.

- Unsafe content: Despite efforts to make the model refuse inappropriate requests, it may sometimes respond to harmful instructions. OpenAI uses its Moderation API to warn about or block certain types of unsafe content, and acknowledges that this filtering produces some false positives and false negatives.

In summary, ChatGPT’s architecture, algorithms, and training methods together make it adaptable to a wide range of natural language processing tasks. Its limitations, however, show that making AI models more accurate and more context-aware remains an ongoing challenge. As the technology advances, addressing these limitations will be key to realizing the full potential of conversational AI models like ChatGPT.

ChatGPT Architecture: Exploring the Architecture Behind the Language Mastermind

ChatGPT, the brainchild of OpenAI, has taken the world by storm with its ability to generate human-quality text and engage in meaningful conversations. But have you ever wondered what lies beneath the hood of this impressive language model? Today, we delve into the intricate architecture of ChatGPT, using examples, visuals, and clear explanations to shed light on its inner workings.

Foundation: The Transformer Reigns Supreme

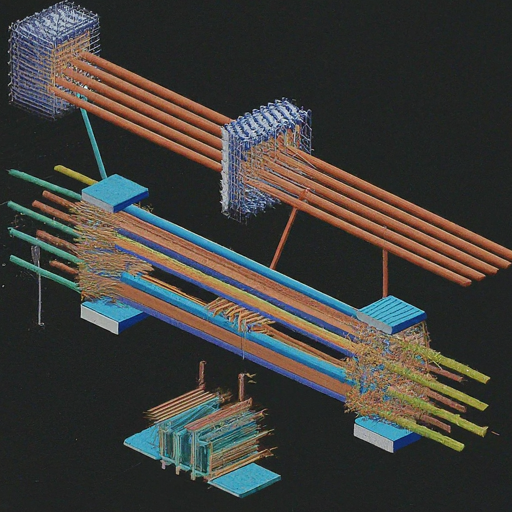

At the heart of ChatGPT lies the Transformer architecture, a neural network design that uses attention to capture relationships between words, even when they are far apart in a long sequence of text. Two ideas are key to understanding it:

- Self-attention: Imagine each word in a sentence as an actor on a stage. Self-attention lets the model work out how much each word should “pay attention” to every other word, capturing contextual relationships and dependencies. In GPT models such as ChatGPT, attention is masked so that each word can attend only to itself and the words before it.

- Encoder-decoder structure: The original Transformer pairs an encoder with a decoder. Think of the encoder as the listener, which processes the input text, and the decoder as the speaker, which generates the output text. GPT models, including ChatGPT, keep only the decoder: the prompt and the response form a single sequence, and the model generates the response one token at a time.
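Here is a minimal sketch of self-attention in plain Python, using the scaled dot-product formulation from the original Transformer paper together with a causal mask of the kind GPT-style models apply, so each token attends only to itself and earlier tokens. To keep it short, it assumes the token vectors serve directly as queries, keys, and values; real models compute these with learned projection matrices and run many attention heads in parallel. The input vectors are made up.

```python
import math


def softmax(xs):
    """Convert a list of scores into probabilities."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def causal_self_attention(x):
    """Scaled dot-product attention where token i attends only to tokens 0..i.

    x is a list of token vectors (lists of floats). For simplicity, the same
    vectors act as queries, keys, and values (no learned projections).
    Returns the output vectors and the attention weights for each token.
    """
    d = len(x[0])
    outputs, weights_all = [], []
    for i, q in enumerate(x):
        visible = x[: i + 1]  # causal mask: later tokens are not visible
        scores = [sum(qa * ka for qa, ka in zip(q, k)) / math.sqrt(d) for k in visible]
        weights = softmax(scores)
        # Each output is a weighted sum of the visible value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, visible)) for j in range(d)]
        outputs.append(out)
        weights_all.append(weights)
    return outputs, weights_all


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three made-up 2-D token vectors
out, attn = causal_self_attention(tokens)
for i, w in enumerate(attn):
    print(i, [round(a, 3) for a in w])
```

Each row of attention weights sums to 1, and row i has only i + 1 entries because later tokens are masked out.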

Layers Upon Layers: Building Complexity

ChatGPT doesn’t rely on a single Transformer block; it stacks many blocks on top of one another (the largest GPT-3 model, for example, has 96), allowing it to learn more complex relationships and generate more nuanced text. Imagine each layer adding another level of understanding and refinement to the process.

This table illustrates the typical structure of a multi-layered Transformer:

| Layer | Description |
|---|---|
| Input Embedding | Converts each token into a vector of numbers |
| Positional Encoding | Adds information about each token’s position in the sequence |
| Self-Attention Layers | Relate each token to the other tokens in the sequence |
| Feed-Forward Network | Transforms each token’s vector independently, adding non-linearity |
| Output Layer | Produces a probability distribution over the vocabulary for the next token |
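The first two rows of the table can be sketched in a few lines of Python. This example assumes the fixed sinusoidal positional encoding from the original Transformer paper; GPT models instead learn their position embeddings during training, but either way the position vector is added to the token embedding. The three-word vocabulary and the random embedding values are hypothetical placeholders for learned weights.

```python
import math
import random


def sinusoidal_positions(seq_len, d_model):
    """Sinusoidal positional encodings (original Transformer paper):
    PE[pos][2k]   = sin(pos / 10000^(2k / d_model))
    PE[pos][2k+1] = cos(pos / 10000^(2k / d_model))
    """
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the same frequency.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


# Hypothetical vocabulary and randomly initialized embedding table
# (in a real model these values are learned during training).
vocab = {"the": 0, "cat": 1, "sat": 2}
d_model = 4
random.seed(0)
embedding = [[random.gauss(0, 0.02) for _ in range(d_model)] for _ in vocab]

tokens = ["the", "cat", "sat"]
pe = sinusoidal_positions(len(tokens), d_model)

# Input to the first Transformer block: token embedding + positional encoding.
x = [[e + p for e, p in zip(embedding[vocab[t]], pe[pos])] for pos, t in enumerate(tokens)]
print([[round(v, 3) for v in row] for row in x])
```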

Fine-tuning the Machine: Supervised Learning Takes Center Stage

While the Transformer architecture provides the foundation, much of ChatGPT’s usefulness comes from how it is trained. The model is first pre-trained on massive amounts of text, learning the nuances of language and adapting to different writing styles and contexts. It is then fine-tuned with supervised learning on example conversations written by human trainers and with reinforcement learning from human feedback (RLHF), which teach it to follow instructions and respond conversationally.

Here’s an example: Imagine fine-tuning a pre-trained model on a dataset of Shakespeare’s plays. Afterward, it would tend to generate text that mimics the Bard’s distinctive style and vocabulary.

Putting it All Together: Unveiling the Magic

Now, let’s bring these elements together to understand how ChatGPT generates text:

- Input: You provide a prompt or question.

- Tokenization: The input is broken down into individual words or sub-words (tokens).

- Embedding: Each token is converted into a numerical representation.

- Transformer processing: The stacked Transformer layers analyze the relationships between tokens, considering their meaning and position in the sequence.

- Decoding: The model converts its final representation into a probability for each possible next token, picks one, appends it to the sequence, and repeats, generating the response one token at a time.

- Output: The generated text unfolds, forming a response to your input.
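The loop below is a minimal sketch of these steps. It makes several simplifying assumptions: tokenization is a whitespace split (GPT models actually use subword tokens), and the embedding and Transformer steps are replaced by a hypothetical hard-coded table, `TOY_LOGITS`, that scores possible next tokens given only the last token. Decoding is greedy (always pick the most likely token); production systems often sample from the distribution instead.

```python
import math

# Hypothetical stand-in for the Transformer: maps the last token to scores
# (logits) over possible next tokens. A real model conditions on the whole sequence.
TOY_LOGITS = {
    "how": {"are": 2.0, "you": 0.5, "<end>": 0.1},
    "are": {"you": 2.5, "are": 0.1, "<end>": 0.2},
    "you": {"today": 1.5, "<end>": 1.0},
    "today": {"<end>": 3.0},
}


def tokenize(text):
    """Simplified tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


def next_token_probs(tokens):
    """Turn the toy logits for the last token into probabilities (softmax)."""
    logits = TOY_LOGITS.get(tokens[-1], {"<end>": 0.0})
    m = max(logits.values())
    exps = {t: math.exp(v - m) for t, v in logits.items()}
    total = sum(exps.values())
    return {t: e / total for t, e in exps.items()}


def generate(prompt, max_new_tokens=10):
    """Greedy autoregressive generation: predict, append, repeat."""
    tokens = tokenize(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        best = max(probs, key=probs.get)  # greedy decoding
        if best == "<end>":
            break
        tokens.append(best)  # the new token becomes part of the input
    return " ".join(tokens)


print(generate("How"))
```

The key idea is the feedback loop: each generated token is appended to the input before the next prediction is made.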

Beyond the Basics: Exploring Advanced Techniques

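Transformer-based models like ChatGPT commonly rely on additional techniques to improve performance:

- Residual connections: Each sublayer’s input is added back to its output, which eases gradient flow through deep stacks of layers and prevents information loss as data passes through them.

- Layer normalization: This technique normalizes the values within each token’s vector, which stabilizes training and helps the model converge faster.

- Knowledge distillation: This involves training a smaller, faster “student” model to reproduce the outputs of a larger, more powerful “teacher” model. It is a common way to make language models cheaper to run, although OpenAI has not publicly documented whether ChatGPT uses it.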
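These three techniques can be sketched in plain Python. This is an illustration under stated assumptions, not ChatGPT’s code: the residual block uses the “pre-norm” arrangement described for GPT-2 and reused in GPT-3 (layer normalization applied at the input of each sublayer), the learned gain and bias of layer normalization are omitted, the sublayer is a made-up function that doubles its input, and the distillation loss is the standard temperature-softened KL divergence between teacher and student outputs, computed on hypothetical logits.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and unit variance (learned gain and bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def residual_block(x, sublayer):
    """Pre-norm residual connection: x + sublayer(layer_norm(x))."""
    return [a + b for a, b in zip(x, sublayer(layer_norm(x)))]


def softmax(logits, temperature=1.0):
    """Softmax with a temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    s = sum(exps)
    return [e / s for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


x = [1.0, 2.0, 3.0]
# A made-up sublayer that doubles its input stands in for attention or the FFN.
y = residual_block(x, lambda h: [2 * v for v in h])
print([round(v, 3) for v in y])

teacher = [1.0, 3.0, 0.2]  # hypothetical teacher logits
student = [0.5, 1.5, 0.1]  # hypothetical student logits
print(round(distillation_loss(student, teacher), 4))
```

The loss is zero only when the student’s softened distribution matches the teacher’s, so minimizing it pushes the smaller model toward the larger model’s behavior.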

Conclusion: Peeking into the Future of Language Models

By understanding the architecture of ChatGPT, we gain valuable insights into the inner workings of these powerful language models. As research progresses, we can expect even more advanced architectures and techniques to emerge, pushing the boundaries of what language models can achieve. Perhaps one day, we’ll be having seamless conversations with AI companions that rival human understanding.

Remember, this blog post provides a simplified overview of a complex topic. Feel free to delve deeper into research papers and technical resources for a more in-depth understanding of ChatGPT and its underlying architecture.

What is GPT?

Generative Pre-trained Transformers (GPT) are a series of neural network models based on the transformer architecture, which represent a significant advancement in artificial intelligence (AI). GPT models are capable of generating human-like text and content across various applications, including conversational agents like ChatGPT. These models have gained widespread adoption across industries due to their ability to automate tasks such as language translation, document summarization, content generation, and more.

GPT matters both for its impact on AI research and for its practical applications. Trained on massive datasets, GPT models can generate diverse content quickly, which can improve productivity and user experiences. Their development has also spurred research toward artificial general intelligence, adding to their significance in the AI landscape.

GPT finds application in numerous use cases, including:

- Social media content creation: Marketers use GPT-powered tools to generate content such as marketing copy, meme captions, and video scripts for social media campaigns.

- Text style conversion: GPT models can transform text into different styles, catering to various professional or casual contexts.

- Code generation and learning: GPT assists in writing and understanding computer code, aiding both learners and experienced developers.

- Data analysis: GPT facilitates efficient compilation and analysis of large datasets, generating insights and reports for business analysts.

- Educational materials: Educators utilize GPT-based software to create learning materials such as quizzes, tutorials, and assessments.

- Voice assistants: GPT enables the development of intelligent interactive voice assistants capable of engaging in natural language conversations.

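GPT is built on the Transformer neural network architecture. The original Transformer has two main modules:

- Encoder: Processes input text by converting it into embeddings and capturing contextual information from the input sequence.

- Decoder: Uses the encoded representation to predict the desired output, leveraging self-attention mechanisms to generate responses.

GPT models use only the decoder stack. The input text is converted into embeddings, masked self-attention captures context from the tokens seen so far, and the model predicts the output one token at a time.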

GPT-3, one of the most prominent models in the GPT series, has 175 billion parameters and was trained on vast datasets drawn from web text, books, and Wikipedia. It was pre-trained with a self-supervised objective (predicting the next token); later models, such as InstructGPT and ChatGPT, added supervised fine-tuning and reinforcement learning from human feedback, producing highly versatile and capable language models.
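A back-of-the-envelope calculation shows where the 175 billion figure comes from. The sketch uses the hyperparameters reported in the GPT-3 paper for the largest model (96 layers, a model width of 12,288, and a 2,048-token context), the 50,257-token vocabulary GPT-3 shares with GPT-2, and the common approximation of 12·d² weights per layer: 4·d² for the query, key, value, and output projections plus 8·d² for a feed-forward network with a hidden size of 4·d. Biases and layer-normalization parameters are ignored, so the result is approximate.

```python
# GPT-3 175B hyperparameters as reported in the GPT-3 paper.
n_layers = 96
d_model = 12_288
n_ctx = 2_048        # context length (one learned position embedding per position)
vocab_size = 50_257  # BPE vocabulary shared with GPT-2

# Per layer: Q, K, V and output projections (4 * d^2)
# plus a feed-forward network d -> 4d -> d (8 * d^2). Biases and layer norms ignored.
per_layer = 12 * d_model ** 2
blocks = n_layers * per_layer

# Token embeddings plus position embeddings.
embeddings = vocab_size * d_model + n_ctx * d_model

total = blocks + embeddings
print(f"{total:,} parameters (~{total / 1e9:.1f} billion)")
```

The result, about 174.6 billion, lands close to the reported 175 billion, and nearly all of it sits in the 96 stacked Transformer layers rather than in the embeddings.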

Applications built on GPT models span diverse domains, including customer feedback analysis, virtual reality interactions, and improved search for help desk support. Amazon Web Services (AWS) offers Amazon Bedrock, which provides API access to large language models similar to GPT-3, so developers can build and scale generative AI applications.