Overview

ChatGPT, developed by OpenAI, reflects the organization's advances in artificial intelligence research. At its core lies the Transformer architecture, a watershed in natural language processing. Built on this architecture, ChatGPT can generate fluent text and respond to prompts in a way that often reads as if a person wrote it.

The foundation of ChatGPT is the Transformer, a neural network architecture designed for processing sequential data, predominantly text. Introduced in the 2017 paper “Attention Is All You Need,” it relies on self-attention mechanisms, which let the model weigh how relevant each part of the input sequence is to every other part when making predictions.

In short, ChatGPT builds on the Transformer architecture, a turning point in natural language processing. Because self-attention can capture relationships between any two positions in an input sequence, including distant ones, it is central to ChatGPT's standing among advanced language models.

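The following is a detailed explanation of the Transformer architecture. The original design pairs an encoder with a decoder; GPT models, including ChatGPT, use only a stack of decoder blocks, but the components below are common to both: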

Input Sequence (Inputs): Processing begins with the text input split into a sequence of tokens, such as words or sub-words.
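To make the idea of a token sequence concrete, here is a toy Python sketch that assigns each distinct word an integer ID. This is an assumption for illustration only: GPT models actually use byte-pair encoding (BPE) over sub-word units, and the names `build_vocab` and `encode` are hypothetical.

```python
# Toy word-level tokenizer (illustrative assumption).
# Real GPT models use byte-pair encoding (BPE) over sub-word units instead.

def build_vocab(corpus):
    """Assign an integer ID to each distinct lowercase word, in order of first appearance."""
    vocab = {"<unk>": 0}  # ID 0 is reserved for words never seen in the corpus
    for word in corpus.lower().split():
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab

def encode(text, vocab):
    """Map each word to its ID, falling back to <unk> for unseen words."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

vocab = build_vocab("the cat sat on the mat")
print(encode("the cat sat on a mat", vocab))  # [1, 2, 3, 4, 0, 5]
```

The unseen word "a" maps to the reserved `<unk>` ID, one of the weaknesses that sub-word schemes such as BPE avoid.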

Input Embedding: Each token in the sequence is mapped to a high-dimensional vector, so the whole input becomes a matrix with one row per token. This embedding layer is learned during training, and its vectors come to capture semantic properties of the tokens.
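Input embedding amounts to a table lookup. The minimal sketch below, assuming a randomly initialized table (a trained model learns these values) and hypothetical helper names, shows how token IDs become a matrix with one vector per token.

```python
import random

def make_embedding_table(vocab_size, d_model, seed=0):
    """One d_model-dimensional vector per token ID.
    Random here; in a real model these values are learned during training."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(vocab_size)]

def embed(token_ids, table):
    """Embedding is a row lookup: token ID i selects row i of the table."""
    return [table[i] for i in token_ids]

table = make_embedding_table(vocab_size=6, d_model=4)
x = embed([1, 2, 3], table)
print(len(x), len(x[0]))  # 3 4
```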

Self-Attention Mechanism: Self-attention computes how strongly each position in the input sequence relates to every other position. It has two stages. First, three separate linear transformations project each input vector into a query, a key, and a value. Second, the attention step compares each position's query with every key (a dot product scaled by the square root of the key dimension) and turns these similarity scores into weights with a softmax. The output for each position is the weighted sum of the value vectors. In GPT models the attention is causal: each position can attend only to itself and earlier positions.
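The two stages of self-attention can be sketched in plain Python as follows. This is a minimal single-head illustration assuming small list-of-lists matrices and a GPT-style causal mask; production implementations use batched tensor operations on GPUs, and the helper names are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]  # exp(-inf) == 0.0, so masked entries get no weight
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v, causal=True):
    """Single-head scaled dot-product attention over the input rows x."""
    # Stage 1: linear projections into queries, keys, and values.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    out = []
    for i in range(len(x)):
        # Stage 2: similarity of query i to every key, scaled by sqrt(d_k).
        scores = [sum(qi * kj for qi, kj in zip(q[i], k[j])) / math.sqrt(d_k)
                  for j in range(len(x))]
        if causal:
            # GPT-style decoders let position i attend only to positions <= i.
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Output for position i: weighted sum of the value vectors.
        out.append([sum(weights[j] * v[j][c] for j in range(len(x))) for c in range(len(v[0]))])
    return out

identity = [[1, 0], [0, 1]]
out = self_attention([[1.0, 0.0], [0.0, 1.0]], identity, identity, identity)
print(out[0])  # [1.0, 0.0]: the first position can only see itself
```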

Multi-Head Self-Attention: The Transformer runs several self-attention operations, or heads, in parallel, which lets the model attend to different parts of the input sequence and capture different kinds of relationships at the same time. Each head applies its own linear transformations for queries, keys, and values. The head outputs are concatenated and passed through a final linear transformation to produce the layer's output.
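A minimal sketch of multi-head attention, assuming each head is given its own query, key, and value projection matrices and that `w_o` is the final output projection. The helper names are hypothetical; the example heads simply select different halves of the input so the effect of concatenation is easy to see.

```python
import math

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def attention_head(x, w_q, w_k, w_v):
    """One head of causal scaled dot-product attention."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    out = []
    for i in range(len(x)):
        # Causal: position i only scores keys 0..i.
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / scale for j in range(i + 1)]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(weights[j] * v[j][c] for j in range(i + 1)) for c in range(len(v[0]))])
    return out

def multi_head_attention(x, heads, w_o):
    """heads: list of (w_q, w_k, w_v) tuples, one per head.
    Head outputs are concatenated per position, then projected by w_o."""
    head_outputs = [attention_head(x, *h) for h in heads]
    concat = [sum((h[i] for h in head_outputs), []) for i in range(len(x))]
    return matmul(concat, w_o)

pick_first = [[1, 0], [0, 1], [0, 0], [0, 0]]  # projects 4 dims -> the first 2
pick_last = [[0, 0], [0, 0], [1, 0], [0, 1]]   # projects 4 dims -> the last 2
identity4 = [[1 if r == c else 0 for c in range(4)] for r in range(4)]
x = [[1, 0, 0, 1], [0, 1, 1, 0]]
out = multi_head_attention(x, [(pick_first,) * 3, (pick_last,) * 3], identity4)
print(out[0])  # [1.0, 0.0, 0.0, 1.0]
```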

Feedforward Network: The output of multi-head self-attention then passes through a feedforward network: two linear layers with a nonlinear activation between them (ReLU in the original Transformer, GELU in GPT models). The same network is applied to each position independently, and its output feeds the next layer rather than being the model's final output.
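The position-wise feedforward network can be sketched as below. The weights are illustrative placeholders and the activation is ReLU, as in the original Transformer; swapping in GELU would match GPT models more closely.

```python
def feed_forward(x, w1, b1, w2, b2):
    """Position-wise FFN: the same two-layer network is applied to each row of x.
    w1 has shape d_model x d_ff and w2 has shape d_ff x d_model."""
    out = []
    for row in x:
        # First linear layer followed by ReLU.
        hidden = [max(0.0, sum(row[i] * w1[i][j] for i in range(len(row))) + b1[j])
                  for j in range(len(b1))]
        # Second linear layer maps back to the model dimension.
        out.append([sum(hidden[j] * w2[j][k] for j in range(len(hidden))) + b2[k]
                    for k in range(len(b2))])
    return out

w1 = [[1, 0, 1], [0, 1, 1]]        # 2 -> 3
b1 = [0, 0, 0]
w2 = [[1, 1], [1, 1], [1, 1]]      # 3 -> 2
b2 = [0.5, 0.5]
print(feed_forward([[1.0, -2.0]], w1, b1, w2, b2))  # [[1.5, 1.5]]
```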

Layer Normalization (Add & Norm Layer): Around each sublayer (self-attention or feedforward), a residual connection adds the sublayer's input to its output, and layer normalization then rescales the result to zero mean and unit variance across its features. Together these keep activations in a stable range and help gradients flow, which makes deep stacks much easier to train. The original Transformer normalizes after the residual addition; GPT-2 and GPT-3 move the normalization to the input of each sublayer.
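The Add & Norm step can be sketched as follows, assuming the post-norm arrangement of the original Transformer, `LayerNorm(x + Sublayer(x))`, with the learned scale (`gamma`) and shift (`beta`) parameters passed in explicitly. Helper names are hypothetical.

```python
import math

def layer_norm(v, gamma, beta, eps=1e-5):
    """Normalize one position's vector to zero mean and unit variance, then scale and shift."""
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [g * (a - mean) / math.sqrt(var + eps) + b for a, g, b in zip(v, gamma, beta)]

def add_and_norm(x, sublayer_out, gamma, beta):
    """Post-norm residual step: add each position's input to the sublayer output, then normalize."""
    return [layer_norm([a + s for a, s in zip(xi, si)], gamma, beta)
            for xi, si in zip(x, sublayer_out)]

out = add_and_norm([[1.0, 2.0, 3.0]], [[1.0, 1.0, 1.0]], gamma=[1, 1, 1], beta=[0, 0, 0])
print(out)  # roughly [[-1.22, 0.0, 1.22]]
```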

Positional Encoding: Self-attention by itself has no notion of token order, so a positional encoding, a vector representing each token's position in the sequence, is added to the input embedding. The original paper uses fixed sinusoidal functions; GPT models instead learn their position embeddings during training.
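For reference, the sinusoidal encoding from the original paper can be written as a short Python sketch. GPT models learn their position embeddings instead, so treat this as an illustration of the original design rather than of ChatGPT; the helper names are hypothetical.

```python
import math

def sinusoidal_positional_encoding(seq_len, d_model):
    """PE[pos][2i] = sin(pos / 10000^(2i/d_model)), PE[pos][2i+1] = cos(same angle)."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):  # i is the even dimension index, i.e. 2i in the formula
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

def add_positions(embeddings, pe):
    """Positional information is added element-wise to the token embeddings."""
    return [[e + p for e, p in zip(er, pr)] for er, pr in zip(embeddings, pe)]

pe = sinusoidal_positional_encoding(seq_len=3, d_model=4)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0]
```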

Stacking Layers: The Transformer gains depth by stacking layers into a deep neural network. Each layer repeats the multi-head self-attention and feedforward sublayers, with their Add & Norm steps, but has its own weights.
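Stacking can be illustrated with a loop that applies the same block structure several times. In this sketch the attention and feedforward sublayers are hypothetical stand-ins passed in as functions (the example ones are deliberately trivial and are not real attention), and post-norm Add & Norm without learned scale and shift is assumed.

```python
import math

def layer_norm(v, eps=1e-5):
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [(a - mean) / math.sqrt(var + eps) for a in v]

def residual_norm(x, sublayer):
    """LayerNorm(x + sublayer(x)), applied position by position."""
    y = sublayer(x)
    return [layer_norm([a + b for a, b in zip(xi, yi)]) for xi, yi in zip(x, y)]

def transformer_stack(x, layers):
    """Each layer is an (attention, feed_forward) pair of callables that map a list of
    position vectors to a list of the same shape. In a real model every layer has the
    same structure but its own learned weights."""
    for attention, feed_forward in layers:
        x = residual_norm(x, attention)
        x = residual_norm(x, feed_forward)
    return x

def average_positions(x):
    """Stand-in for attention (NOT real attention): every position gets the mean of all positions."""
    return [[sum(col) / len(x) for col in zip(*x)] for _ in x]

def double(x):
    """Stand-in for the feedforward network: scales every value by 2."""
    return [[2 * a for a in row] for row in x]

out = transformer_stack([[1.0, 2.0], [3.0, 5.0]], [(average_positions, double)] * 6)
print(out)  # two normalized rows, each close to [-1, 1]
```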

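Output: The final layer produces a vector representation for every position of the input sequence. In a GPT model, the vector at the last position is projected onto the vocabulary and passed through a softmax, giving a probability for each possible next token; the chosen token is appended to the input and the process repeats to generate text.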
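The final step of a GPT-style model can be sketched as follows, assuming a hypothetical output projection `w_vocab` of shape `d_model x vocab_size` and greedy decoding (always picking the most probable token); real systems often sample from the distribution instead.

```python
import math

def next_token_distribution(final_vector, w_vocab):
    """Project the last position's vector to one logit per vocabulary entry,
    then apply a softmax to get next-token probabilities."""
    logits = [sum(final_vector[i] * w_vocab[i][t] for i in range(len(final_vector)))
              for t in range(len(w_vocab[0]))]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_next_token(final_vector, w_vocab):
    """Return the ID of the most probable next token."""
    probs = next_token_distribution(final_vector, w_vocab)
    return max(range(len(probs)), key=probs.__getitem__)

w_vocab = [[0.1, 2.0, -1.0],
           [0.0, 0.5, 0.3]]  # d_model = 2, vocab_size = 3 (illustrative values)
print(greedy_next_token([1.0, 1.0], w_vocab))  # 1
```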

Embeddings are numerical representations of concepts, expressed as sequences of numbers (vectors), that make it easy for computers to measure how related two concepts are: similar concepts map to nearby vectors. Since OpenAI first launched its /embeddings endpoint, many applications have used embeddings for personalization, recommendation, and search.
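Relatedness between embeddings is commonly measured with cosine similarity. The sketch below ranks documents against a query using made-up three-dimensional vectors; real vectors would come from an embedding model such as the one behind the /embeddings endpoint and have far more dimensions. The helper names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def rank_by_similarity(query_vec, docs):
    """docs: dict of name -> embedding vector. Returns names, most similar first."""
    return sorted(docs, key=lambda name: cosine_similarity(query_vec, docs[name]), reverse=True)

# Hypothetical 3-dimensional embeddings, for illustration only.
docs = {
    "cat care guide": [0.9, 0.1, 0.0],
    "dog training tips": [0.7, 0.6, 0.1],
    "tax filing help": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.0]
print(rank_by_similarity(query, docs))  # ['cat care guide', 'dog training tips', 'tax filing help']
```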

During unsupervised pre-training, a language model develops a broad set of skills and pattern-recognition abilities. At inference time, it draws on these abilities to rapidly adapt to or recognize the desired task. “In-context learning” describes the inner loop of this process, which takes place within the forward pass over each sequence: the model picks up the task from the examples in its input, without any update to its weights.

Note that the sequences shown in this diagram are not representative of the data the model sees during pre-training. They only illustrate that a single sequence can contain repeated sub-tasks embedded within it.

Larger models make increasingly efficient use of in-context information. This shows up on a simple task in which the model must remove random symbols from a word, evaluated both with and without a natural-language description of the task. The steeper in-context learning curves of larger models indicate a stronger ability to learn the task from the context alone.
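To show what an in-context learning prompt for the symbol-removal task might look like, here is a hypothetical prompt builder. The symbol set, prompt wording, and `word = answer` layout are assumptions for illustration, not the format used in any published evaluation; the model would be expected to complete the final line.

```python
import random

SYMBOLS = "!./,?;:"  # assumed set of noise symbols

def corrupt(word, rng, rate=0.5):
    """Insert a random symbol after each character with probability `rate`."""
    out = []
    for ch in word:
        out.append(ch)
        if rng.random() < rate:
            out.append(rng.choice(SYMBOLS))
    return "".join(out)

def build_prompt(examples, query, with_description=True, seed=0):
    """Few-shot prompt: optional task description, solved examples, then the unsolved query."""
    rng = random.Random(seed)
    lines = ["Remove the random symbols from each word:"] if with_description else []
    for word in examples:
        lines.append(f"{corrupt(word, rng)} = {word}")
    lines.append(f"{corrupt(query, rng)} =")
    return "\n".join(lines)

print(build_prompt(["apple", "river"], "succession"))
```

Comparing the model's completions with `with_description=True` and `with_description=False`, and with more or fewer examples, mirrors the two settings described above.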

AI Language Models by Size:

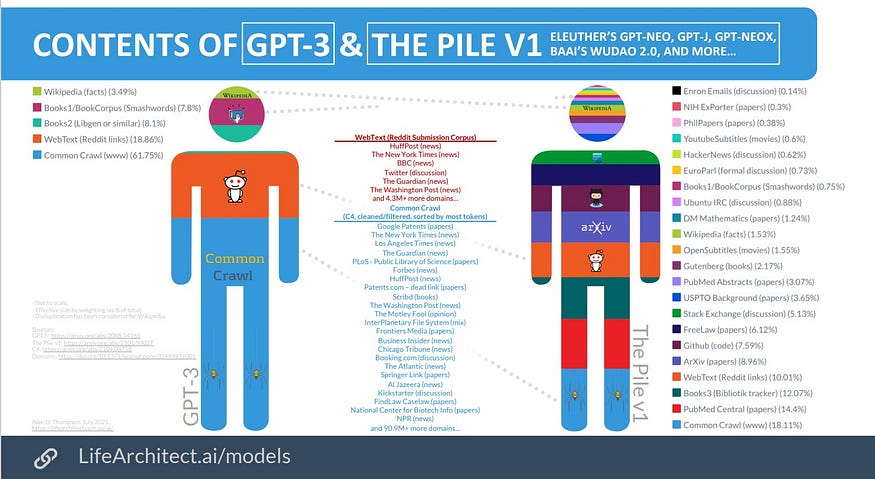

Data Sources / Contents:

Key sources of GPT-3's training data:

Unlike traditional Natural Language Processing (NLP) systems that depend heavily on hand-crafted rules and labeled data, ChatGPT uses a neural network that is first pre-trained on large amounts of unlabeled text, learning to predict the next token without explicit guidance on what constitutes a correct response. Human guidance comes later, during fine-tuning. This approach makes ChatGPT a versatile tool for a wide range of conversational tasks.

ChatGPT is trained with Reinforcement Learning from Human Feedback (RLHF), using the same methods as InstructGPT but with slight differences in the data collection setup. First, an initial model is trained with supervised fine-tuning: human AI trainers write conversations in which they play both sides, the user and the AI assistant, and they have access to model-written suggestions to help them compose responses. This new dialogue dataset is then mixed with the InstructGPT dataset, which is converted into a dialogue format, as illustrated in the diagram below.
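The step of converting the InstructGPT data into a dialogue format and mixing it with the trainer-written conversations can be pictured with a small sketch. The record layout (a prompt paired with a completion) and the `role`/`content` message fields are assumptions for illustration; OpenAI has not published its exact data schema, and the helper names are hypothetical.

```python
def to_dialogue(prompt, completion):
    """Recast a single-turn prompt/completion pair as a two-message dialogue."""
    return [
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": completion},
    ]

def mix_datasets(dialogues, instruct_pairs):
    """Combine trainer-written dialogues with instruction data converted to dialogue form."""
    return dialogues + [to_dialogue(p, c) for p, c in instruct_pairs]

trainer_dialogues = [[
    {"role": "user", "content": "What is a Transformer?"},
    {"role": "assistant", "content": "A neural network architecture built on self-attention."},
]]
instruct_pairs = [("Summarize: The cat sat on the mat.", "A cat sat on a mat.")]
mixed = mix_datasets(trainer_dialogues, instruct_pairs)
print(len(mixed))  # 2
```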

Key Features and Capabilities:

- Text and response generation based on prompts.

- Conversational AI capabilities.

- Interactive storytelling.

- Content generation.

- Customer service and support.

Cool Applications with ChatGPT:

ChatGPT serves various creative purposes. Here are some examples:

- Generating Text and Responses: Ask ChatGPT to generate text or complete sentences based on prompts.

- Interactive Storytelling: Prompt ChatGPT to generate the next part of a story, creating an engaging narrative.

- Conversational AI: Utilize ChatGPT for real-time conversations, beneficial for customer service and interactive storytelling.

- Enhancing Search Engine Functionality: Augment search engines with an informative, interactive search agent that tailors content to each user's query.

Innovative ChatGPT Tips and Tricks:

- Use creative prompts to enhance response quality and creativity.

- Build chatbots with personality for improved user experience.

- Generate diverse content types, including text, summaries, and poems.

- Fine-tune ChatGPT for language translation to enhance accuracy.

- Combine ChatGPT with other models for advanced AI applications.

- Experiment with different APIs for varied capabilities.

- Personalize the model through fine-tuning on specific datasets.

- Customize tone or style with explicit instructions, such as a system message that sets the assistant's voice.

Examples of Prompts:

- As a Personal Chef: Provide dietary preferences for recipe suggestions.

- As a Math Teacher: Explain mathematical concepts in easy-to-understand terms.

- As a Blog Writer: Request a blog post to showcase product value.

- As a YouTuber: Seek a YouTube ad script for a relatable message.

- As a Software Developer: Specify web app requirements for architecture and code.
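Persona prompts like these are often sent as a system message followed by a user message. The sketch below only builds a request body in the style of OpenAI's Chat Completions API and prints it; nothing is sent over the network. The model name `gpt-3.5-turbo` is an assumption, and `build_chat_request` is a hypothetical helper.

```python
import json

def build_chat_request(persona, request, model="gpt-3.5-turbo"):
    """Build a Chat Completions-style request body: the persona goes in a
    system message and the user's task in a user message."""
    return {
        "model": model,  # assumed model name
        "messages": [
            {"role": "system", "content": f"You are {persona}."},
            {"role": "user", "content": request},
        ],
    }

body = build_chat_request(
    "a personal chef",
    "I am vegetarian and avoid gluten. Suggest three dinner recipes.",
)
print(json.dumps(body, indent=2))
```

Keeping the persona in the system message and the task in the user message makes it easy to reuse one persona across many requests.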

Exploring ChatGPT:

Discover ChatGPT’s capabilities at ChatGPT Exploration.

Limitations:

- ChatGPT may produce incorrect or nonsensical answers.

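- Sensitivity to changes in input phrasing: a slightly rephrased question can get a different answer.

- Excessive verbosity and overuse of certain phrases.

- Tends to guess the user's intent rather than ask clarifying questions for ambiguous queries.

- May occasionally respond to harmful instructions or exhibit biased behavior.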

Feedback Welcome:

OpenAI encourages user feedback to enhance and refine ChatGPT’s performance.